Nvidia Rubin AI Architecture Launch: Faster Training and Inference

- Covertly AI

- 4 days ago

- 3 min read

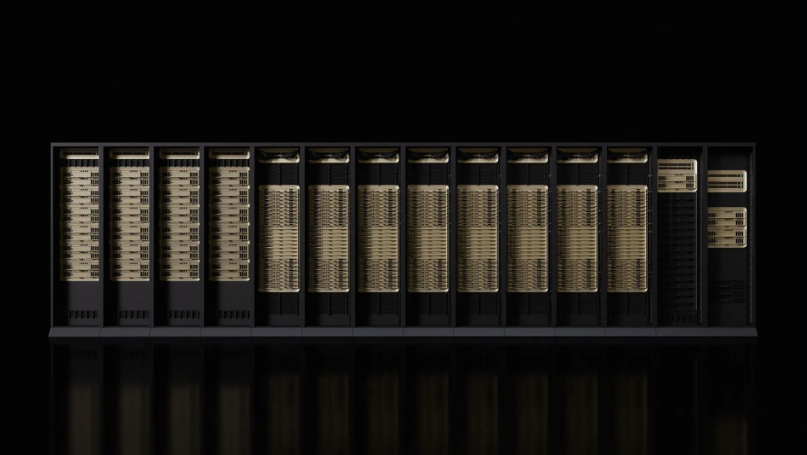

Nvidia has unveiled its next major leap in artificial intelligence hardware with the official launch of its Rubin computing architecture at the Consumer Electronics Show.

Announced by CEO Jensen Huang, Rubin is positioned as Nvidia’s most advanced AI platform to date, designed to meet the rapidly growing computational demands of modern AI systems. Production of the architecture is already underway, with a further ramp-up expected in the second half of the year, signaling Nvidia’s intent to move quickly as demand for AI infrastructure accelerates worldwide (TechCrunch).

First revealed in 2024, Rubin continues Nvidia’s fast-paced hardware evolution, replacing the Blackwell architecture, which itself succeeded Hopper and Lovelace. This relentless development cycle has played a key role in turning Nvidia into one of the most valuable companies in the world. Huang emphasized that Rubin was built to address a fundamental challenge facing the industry: the explosive growth in compute requirements driven by increasingly capable and complex AI models. He described the architecture as already in full production, underscoring Nvidia’s confidence in its readiness for large-scale deployment (Yahoo Finance).

Named after astronomer Vera Florence Cooper Rubin, the Rubin architecture comprises six interconnected chips designed to work together as a unified system. At its core is the Rubin GPU, but Nvidia has expanded the architecture to tackle persistent bottlenecks beyond raw compute. Enhancements to BlueField networking and NVLink interconnects aim to improve data movement, while a new Vera CPU has been introduced to support agentic reasoning and long-running, autonomous AI workflows. This holistic design reflects Nvidia’s growing focus on entire AI systems rather than standalone processors (Mezha).

One of the most notable advancements in Rubin is its approach to memory and storage. Nvidia’s senior director of AI infrastructure solutions, Dion Harris, highlighted the increasing strain that newer AI workloads place on key-value (KV) cache memory, particularly workloads involving agent-based systems and extended tasks. To address this, Nvidia has introduced a new external storage tier that connects directly to the compute device, allowing organizations to scale storage more efficiently without overwhelming on-chip resources. This change is intended to support more sophisticated AI applications that rely on persistent context and long-term reasoning (Yahoo Finance).
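To see why KV cache memory comes under strain as tasks get longer, a rough sizing sketch helps. The formula below is the commonly used estimate for a transformer decoder (one key tensor and one value tensor per layer, per token). The model dimensions are hypothetical values chosen for illustration; they are not figures from Nvidia or from any Rubin specification.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   batch_size=1, bytes_per_value=2):
    """Estimate KV cache size in bytes.

    The leading factor of 2 accounts for storing both keys and values.
    bytes_per_value=2 assumes 16-bit values (an assumption, not a Rubin spec).
    """
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_value)


# Hypothetical model shape, for illustration only.
HYPOTHETICAL_MODEL = dict(num_layers=80, num_kv_heads=8, head_dim=128)

for tokens in (8_192, 131_072, 1_048_576):
    gib = kv_cache_bytes(seq_len=tokens, **HYPOTHETICAL_MODEL) / 2**30
    print(f"{tokens:>9} tokens -> {gib:6.1f} GiB per sequence")
```

Under these assumed dimensions the cache grows linearly with context length, from 2.5 GiB at 8,192 tokens to 320 GiB at roughly one million tokens for a single sequence. Growth of that kind is what makes a storage tier beyond on-chip memory attractive for long-running agents.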

Performance gains are another central feature of the Rubin platform. Nvidia reports that Rubin runs model training approximately three and a half times faster than Blackwell and delivers about five times the performance on inference tasks, peaking at up to 50 petaflops. In addition to raw speed, the architecture offers major improvements in energy efficiency, providing roughly eight times more inference compute per watt. These gains are critical as data centers face growing power constraints alongside rising demand for AI capabilities (TechCrunch).
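As a back-of-the-envelope reading of those ratios, the sketch below converts each reported gain into the fraction of baseline time or energy needed for the same amount of work. It assumes a workload scales perfectly with the headline figure, which real workloads rarely do, so treat the results as upper bounds on the benefit. The constant and function names are illustrative.

```python
# Ratios as reported in the article (Rubin relative to Blackwell).
TRAINING_SPEEDUP = 3.5
INFERENCE_SPEEDUP = 5.0
INFERENCE_PER_WATT_GAIN = 8.0


def relative_cost(gain):
    """Fraction of baseline time or energy needed for the same work,
    assuming ideal scaling with the reported gain."""
    if gain <= 0:
        raise ValueError("gain must be positive")
    return 1.0 / gain


print(f"Training time:    {relative_cost(TRAINING_SPEEDUP):.1%} of baseline")
print(f"Inference time:   {relative_cost(INFERENCE_SPEEDUP):.1%} of baseline")
print(f"Inference energy: {relative_cost(INFERENCE_PER_WATT_GAIN):.1%} of baseline")
```

Read this way, a training run would take about 28.6% of the baseline time, and the same inference compute would need about 12.5% of the baseline energy.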

Rubin is entering the market at a time of intense global competition to build AI infrastructure. Nearly every major cloud provider is expected to deploy Rubin chips, and Nvidia’s partners for the platform include Anthropic, OpenAI, and Amazon Web Services. The architecture will also power large-scale scientific systems such as HPE’s Blue Lion supercomputer and the forthcoming Doudna supercomputer at Lawrence Berkeley National Laboratory. Nvidia estimates that total spending on AI infrastructure could reach between $3 trillion and $4 trillion over the next five years, and Rubin positions the company at the center of this investment wave (Mezha).

This article was written by the Covertly.AI team.

Works Cited

Mezha. “Nvidia Introduces Rubin AI Architecture for Enhanced Computing Performance.” Mezha, 2026, https://mezha.net/eng/bukvy/nvidia-introduces-rubin-ai-architecture-for-enhanced-computing-performance/.

TechCrunch. “Nvidia Launches Powerful New Rubin Chip Architecture.” TechCrunch, 5 Jan. 2026, https://techcrunch.com/2026/01/05/nvidia-launches-powerful-new-rubin-chip-architecture/.

Yahoo Finance. “Nvidia Launches Powerful Rubin Chip.” Yahoo Finance, 2026, https://finance.yahoo.com/news/nvidia-launches-powerful-rubin-chip-221658470.html.

Cha, Ariana Eunjung. “Nvidia CEO Jensen Huang Delivers Some Good News for Investors at CES.” MarketWatch, www.marketwatch.com/story/nvidia-ceo-jensen-huang-delivers-some-good-news-for-investors-at-ces-4b3a3c7a.

Khollam, Aamir. “Nvidia Unveils Rubin AI Platform at CES 2026.” Interesting Engineering, interestingengineering.com/ai-robotics/nvidia-rubin-ai-platform-ces-2026.

“Nvidia Chips and Mercedes.” The New York Times, video, www.nytimes.com/video/us/100000010626065/nvidia-chips-mercedes.html.
